Contents

- Who is Sergey Pronin?

- What is your current role?

- I know you have an experimental culture and have probably tried different approaches. What went well and what failed during this process?

- Can you tell me about your top failures and fixes?

- Why would anyone want to go beyond bare-cloud and Dockerize their apps? It looks like a complex process; what’s the benefit?

- You are a manager, so there should also be a man-hour saving side to it. With the high-density approach, can you manage this huge load with a smaller team?

- Can you give me some dollar figures on how much you are saving with this new architecture and technology choices?

- What is your advice for Docker-ready infrastructures for both small businesses and enterprises?

Who is Sergey Pronin?

Sergey Pronin, SaaSOps Software Engineering Manager, started investigating how computers work at the age of 7 and was coding by the time he was 10. He graduated from Moscow Technical University, specializing in information security and networking, and began his career as a network engineer focused on building infrastructures that were highly resilient to failure. He currently leads two teams at Aurea: Central Docker and Central Support. Together, the two teams build the ship that carries containers for parent company ESW Capital, which staffs all its businesses exclusively with top talent from Crossover.

What is your current role?

My journey started a year ago. Aurea was growing rapidly, and a decision was made to build a central repository to host all Docker containers of all companies and products owned by ESW Capital. There are two parts: Central Docker and Central Database (DB). Central Docker was the more challenging, as Aurea aimed to do something never done before by taking a high-density approach and putting hundreds of containers on a single instance. When we attended DockerCon and raised the question of how we could run hundreds of containers on a single instance, everybody thought we were insane. But that is what we do in Central: insane stuff that nobody else is doing, solving issues along the way. This revolutionary thinking is driven by top management at ESW Capital, Aurea and Crossover, who constantly push the boundaries of what is possible.

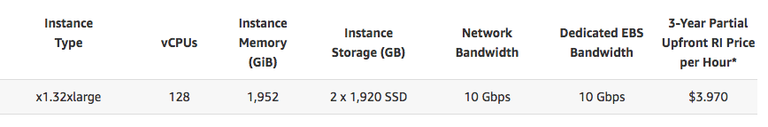

The outcome is that we currently host 2,500 containers in Central on standalone Docker across 8 x1.32xlarge instances, which is the biggest instance type AWS (Amazon Web Services) has to offer. We also run a Kubernetes cluster of 4 instances and 1.4K pods, which is also insane, because the global Kubernetes community suggests running at most 100 pods per instance and we’re running at least 300 per instance.
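To put these density figures in perspective, here is a small worked calculation. It is a sketch under two assumptions: that the “1.4K” Kubernetes figure counts pods, and that the community guidance corresponds to the kubelet’s default per-node limit (`maxPods`), which is 110. The helper name `per_instance` is hypothetical.

```python
import math

# Kubelet's default per-node pod limit (the maxPods setting); clusters can raise it.
DEFAULT_MAX_PODS_PER_NODE = 110


def per_instance(workloads: int, instances: int) -> float:
    """Average number of workloads (containers or pods) per instance."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    return workloads / instances


# Figures quoted in the interview.
docker_density = per_instance(2500, 8)  # standalone Docker on x1.32xlarge hosts
k8s_density = per_instance(1400, 4)     # Kubernetes cluster, assuming "1.4K" means pods

print(f"Docker: {docker_density:.1f} containers per instance")
print(f"Kubernetes: {k8s_density:.1f} pods per node "
      f"({k8s_density / DEFAULT_MAX_PODS_PER_NODE:.1f}x the default limit)")

# Nodes the same 1,400 pods would need if every node stayed at the default limit.
print("Nodes needed at default maxPods:",
      math.ceil(1400 / DEFAULT_MAX_PODS_PER_NODE))
```

At the default limit, the same pod count would need 13 nodes instead of 4, which is the density gap the answer describes.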

I know you have an experimental culture and have probably tried different approaches. What went well and what failed during this process?

Well, in the beginning nothing worked, but over time we matured and learned a lot about Docker, and Docker itself matured, introducing a lot of bug fixes and improvements. What didn’t work was that we started without any high availability or scheduler; the management decision was to keep things simple. We ran plain vanilla Docker, exposing the Docker API, while trying to maintain 99.9% availability at the same time. It was really challenging, and everyone was complaining about our uptime. However, every experiment we ran brought us valuable knowledge, and we stuck to the principle of keeping things simple. If we introduced a feature and it didn’t give us a 10x increase in performance, we rejected it.
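A 99.9% target translates into a concrete downtime budget, which helps explain why uptime complaints came quickly. The sketch below uses a hypothetical helper, `downtime_budget`, and assumes a 365-day year and a 30-day month.

```python
from datetime import timedelta


def downtime_budget(availability: float, period: timedelta) -> timedelta:
    """Maximum downtime allowed in `period` while still meeting `availability`."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    # The unavailable fraction of the period is the budget.
    return period * (1.0 - availability)


year = timedelta(days=365)
month = timedelta(days=30)

# 99.9% ("three nines") leaves roughly 8.8 hours per year, about 43 minutes per month.
print("Per year: ", downtime_budget(0.999, year))
print("Per month:", downtime_budget(0.999, month))
```

A single badly timed host restart on a dense host can consume a large part of that monthly budget for every container on it.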

Can you tell me about your top failures and fixes?

We had a severe storage failure while we were running vanilla Docker hosts and Dockerizing legacy apps. We were storing databases (we run databases on Docker hosts as well) on EBS (Elastic Block Store) volumes attached to the Docker hosts. It became difficult to scale EBS because of volume size, IOPS (input/output operations per second), and bandwidth limits, so we opted for LVM (Logical Volume Manager). We introduced linear LVM, which writes data sequentially, filling one volume before moving on to the next, even if you have many of them. Initially it worked, but then we started to face issues as the last volume became a performance bottleneck. The decision was then made to go with striped LVM, which spreads your data across multiple volumes simultaneously, eliminating that bottleneck. This worked for a while too. However, the problem on Docker hosts is the amount of data, 40–60 TB on some hosts, and the time it takes to migrate. In addition, once you’ve migrated from linear LVM to striped LVM, there’s no way back. We did the migration and faced severe performance degradation, which caused a lot of products to fail; it was almost two weeks of hell for us.
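The difference between the two LVM layouts comes down to how logical data maps onto physical volumes. The sketch below is a simplified model, not LVM itself: the volume count and capacity are hypothetical, data is treated as fixed-size chunks, and it assumes the hot data is the most recently written region, which is what makes the last volume a bottleneck in a linear layout.

```python
from collections import Counter
from collections.abc import Callable, Iterable

NUM_VOLUMES = 4           # hypothetical: four EBS volumes in one volume group
CHUNKS_PER_VOLUME = 1000  # hypothetical capacity of each volume, in chunks


def linear_volume(chunk: int) -> int:
    """Linear layout: fill volume 0, then volume 1, and so on."""
    return chunk // CHUNKS_PER_VOLUME


def striped_volume(chunk: int) -> int:
    """Striped layout: consecutive chunks rotate round-robin across all volumes."""
    return chunk % NUM_VOLUMES


def io_per_volume(chunks: Iterable[int], layout: Callable[[int], int]) -> Counter:
    """Count how many of the given chunk accesses land on each volume."""
    return Counter(layout(c) for c in chunks)


# Hot working set: the most recently written 10% of the logical volume.
total = NUM_VOLUMES * CHUNKS_PER_VOLUME
hot_chunks = range(total - total // 10, total)

print("linear: ", dict(io_per_volume(hot_chunks, linear_volume)))   # {3: 400}
print("striped:", dict(io_per_volume(hot_chunks, striped_volume)))  # {0: 100, 1: 100, 2: 100, 3: 100}
```

In the linear model every hot access lands on the last volume, while striping spreads the same accesses evenly, which is why striping removes that particular bottleneck.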

The fix was quite simple in the end. We asked ourselves: “OK, we’re failing on storage. How do we solve this quickly, so we don’t have to worry about it until we find a permanent solution?” We decided to over-provision our storage with large, high-IO volumes and, instead of using LVM, go back to single volumes. The difference this time was that each volume had high provisioned IO, and we could smartly rebalance the load between volumes over time. And it worked: our storage became simpler, and that setup is still working now. We were spending more, but for the sake of stability it was a good trade-off.
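The interview does not say how the load was rebalanced across volumes. One common heuristic for this kind of problem is greedy largest-first placement: sort workloads by demand and put each one on the currently least-loaded volume. The sketch below applies it to hypothetical databases and IOPS figures; it illustrates the idea and is not Aurea’s actual tooling.

```python
import heapq


def balance(db_iops: dict[str, int], num_volumes: int) -> list[list[str]]:
    """Greedy largest-first placement: each database goes to the least-loaded volume."""
    # Min-heap of (current IOPS load, volume index); the top is the least-loaded volume.
    heap = [(0, v) for v in range(num_volumes)]
    heapq.heapify(heap)
    placement: list[list[str]] = [[] for _ in range(num_volumes)]
    # Place the busiest databases first so the large loads are spread out early.
    for name, iops in sorted(db_iops.items(), key=lambda kv: kv[1], reverse=True):
        load, vol = heapq.heappop(heap)
        placement[vol].append(name)
        heapq.heappush(heap, (load + iops, vol))
    return placement


# Hypothetical databases and their IOPS demand.
demand = {"crm": 9000, "billing": 7000, "search": 6000,
          "wiki": 3000, "mail": 2000, "audit": 1000}

for vol, names in enumerate(balance(demand, num_volumes=2)):
    print(f"volume {vol}: {names}, {sum(demand[n] for n in names)} IOPS")
```

With these numbers both volumes end up at 14,000 IOPS. In general the greedy rule gives a good, though not always optimal, split, and it is cheap enough to rerun whenever demand shifts.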

Why would anyone want to go beyond bare-cloud and Dockerize their apps? It looks like a complex process; what’s the benefit?

The main benefit is simplicity and standardization. In our case, as the Central team, we’re building the platform: the cargo ship. Other teams, for example the centralization factory, help applications move to the Central platform. Imagine running 70 different software companies and adding one new company per week. We take each new company, analyze what they already have, Dockerize their products, standardize their CI/CD pipeline and monitoring, and deploy them on our Central platform.

The SaaSOps team can easily deploy or redeploy newer layers for any product they want because we have a standard CI/CD pipeline. It allows us to scale very quickly. For startups, Docker should be the gold standard for fast growth, but for enterprises it might be a bit different. We’re getting cost benefits because we’re using a high-density approach and standardizing everything.

You are a manager, so there should also be a man-hour saving side to it. With the high-density approach, can you manage this huge load with a smaller team?

Yes, this is actually key to the Crossover model. Crossover is responsible for finding and hiring top talent for over 70 software companies owned by ESW Capital, which lets us achieve economies of scale. Instead of running 70 engineering teams for all the companies we own, we run a single engineering team that can deploy and release all products through a standardized CI/CD pipeline. In terms of managing the infrastructure, you have fewer hosts, each running a lot of containers. If you keep things extremely simple, you can explain “how this thing works” in 5 minutes and have a four-person team manage a huge infrastructure running a few thousand containers.

There is one other key thing: we currently run a Kubernetes cluster, and this brings a lot of complexity. Unlike vanilla Docker, it is made up of many small pieces of software that you need to manage. With our high-density vanilla Docker hosts, every new host added complexity, because we had to manage that host as well: vanilla Docker doesn’t have any high availability, and each host has its own storage and networking that need to be looked after. With Kubernetes, even if you’re running hundreds of different hosts, the complexity stays the same because Kubernetes hides it from you. It manages storage, the mobility of containers, and moving deployments from one node to another. At the same time, Kubernetes is complex, and learning it is a long-term investment for us. At first it looked like an extremely complex solution that we needed to spend a lot of time on. Almost 80% of our team is now focused on Kubernetes. In the long term, it will give us a lot of benefits, and we will be able to handle the whole cluster with only 2 people. It’s a smart strategic decision.

Can you give me some dollar figures on how much you are saving with this new architecture and technology choices?

In Q4 2017, our yearly AWS costs dropped from $13M to $6M, and our goal is to get down to $5M by the end of Q3 2018. This may sound aggressive, but it’s doable. Again, our high-density approach means we run these huge x1.32xlarge instances, and we run them on reservations (Reserved Instances). There are two aspects. First, by running them on reservations you’re already saving around 68%. Second, with the high-density approach you run a few huge instances, whereas running everything on many small instances, as before, actually costs a lot more. In an ideal world this should give around a 90% reduction in your AWS costs, but in reality it gives around 60% or 70%, which is very acceptable.
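The cost figures can be checked with a little arithmetic. The sketch below computes the reductions implied by the quoted dollar amounts. Its second part rests on an assumption the interview does not state: that the reservation discount and the consolidation savings compound multiplicatively. Under that assumption, it asks how large the consolidation savings alone would have to be for the “ideal world” total to reach 90%.

```python
def reduction(before: float, after: float) -> float:
    """Fractional cost reduction when spend goes from `before` to `after`."""
    return (before - after) / before


def combined_reduction(*discounts: float) -> float:
    """Overall reduction when independent discounts compound multiplicatively."""
    remaining = 1.0
    for d in discounts:
        remaining *= 1.0 - d
    return 1.0 - remaining


# Yearly AWS spend in $M, as quoted.
print(f"13 -> 6 (Q4 2017):      {reduction(13, 6):.1%}")
print(f"13 -> 5 (Q3 2018 goal): {reduction(13, 5):.1%}")

# Assumption: ~68% from reservations compounds with consolidation savings.
RESERVATION_SAVING = 0.68
IDEAL_TOTAL = 0.90
needed = 1 - (1 - IDEAL_TOTAL) / (1 - RESERVATION_SAVING)
print(f"consolidation saving needed for 90% overall: {needed:.1%}")
print(f"check: {combined_reduction(RESERVATION_SAVING, needed):.1%}")
```

The quoted drop from $13M to $6M is a reduction of about 54%, and the $5M target corresponds to about 62%. Under the compounding assumption, consolidation would need to cut roughly 69% of the remaining spend on its own to reach 90% overall.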

What is your advice for Docker-ready infrastructures for both small businesses and enterprises?

My main advice would be to keep things simple. Don’t over-complicate things, and follow the best practices available. In our case, we Dockerize all legacy applications, take them off their individual Amazon instances, and put them onto our Docker hosts. We don’t care whether these apps are stateless or stateful; we just take them and move them to Docker as is. But if you’re starting from scratch, for example as a startup, think through the architecture up front and use the 12-factor application methodology: your application should be aligned with your infrastructure. If you don’t do this in the beginning, you’ll face challenges you could have avoided. For enterprises it’s almost the same, except that you have the ability and resources to experiment, trial things, or build a team responsible for patching Docker, as we do.
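For new applications, one concrete 12-factor practice is to keep configuration in environment variables rather than in the code or the image, so the same container image runs unchanged in every environment. The sketch below shows that single factor in Python; the variable names (`DATABASE_URL`, `PORT`, `DEBUG`) and their defaults are hypothetical.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    database_url: str
    port: int
    debug: bool


def load_config(env: Mapping[str, str] = os.environ) -> Config:
    """Build the app's settings from environment variables (12-factor "Config")."""
    return Config(
        database_url=env["DATABASE_URL"],    # required: raises KeyError if unset
        port=int(env.get("PORT", "8080")),   # optional, with a default
        debug=env.get("DEBUG", "false").lower() in ("1", "true", "yes"),
    )


# In a container the values come from `docker run -e ...` or the orchestrator;
# here a plain dict stands in for the environment.
cfg = load_config({"DATABASE_URL": "postgres://db:5432/app", "PORT": "9000"})
print(cfg)
```

Failing fast on a missing required variable surfaces misconfiguration at container start rather than at the first request.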

So everything that runs on Docker should follow best-practice guidelines, but there are of course cases where you simply can’t. In these cases, be ready to patch and tweak things and to spend time in the GitHub community looking for suitable solutions. Even if you believe something isn’t working at the moment, it may turn out to be your golden solution. If you don’t patch it, you may miss a 10x improvement for your infrastructure. The bottom line is: keep things as simple as possible, but don’t be afraid of doing some crazy patches. Expose yourself to new challenges and leave your comfort zone; one of these experiments could be the solution you’ve been looking for.

If you’d like the opportunity to work with the best talent on the most progressive projects, apply for one of our available positions at crossover.com/jobs.

Interview conducted by Sinan Ata, founder of Remote Tips and Director of Local Operations at Crossover.